Calibration Curve 101

Calibration curves are essential for ensuring the accuracy of force measurements. They are used in a wide variety of test and measurement applications, including quality control, research, and engineering.

A calibration curve is a graph that shows the relationship between the output of a measuring instrument and the true value of the quantity being measured. In load cell calibration, it plots the load cell’s output signal against the known loads or forces applied to it, and it is used to verify that the force measuring device is performing accurately.

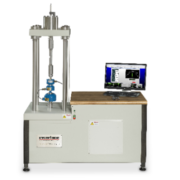

The load cell user will use a known force standard to create the calibration curve. The known force standard is applied to the force measuring device and the output of the instrument is logged via the supporting instrumentation. This process is repeated for a range of known forces.

The calibration curve for a load cell is created by plotting the output signals (typically in voltage or digital units) on the y-axis against the corresponding applied loads or forces on the x-axis. The resulting graph is the calibration curve.
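As a rough illustration of the plotting step, the logged points can be summarized by a best-fit straight line. The sketch below assumes an idealized five-point calibration run; the data values, the mV/V output unit, and the `fit_line` helper are hypothetical and chosen only for illustration.

```python
def fit_line(loads, outputs):
    """Least-squares fit of: output = slope * load + offset."""
    n = len(loads)
    if n < 2 or n != len(outputs):
        raise ValueError("need at least two matching load/output pairs")
    mean_x = sum(loads) / n
    mean_y = sum(outputs) / n
    sxx = sum((x - mean_x) ** 2 for x in loads)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(loads, outputs))
    if sxx == 0:
        raise ValueError("applied loads must not all be identical")
    slope = sxy / sxx
    offset = mean_y - slope * mean_x
    return slope, offset


# Hypothetical calibration run: known forces (x-axis) and logged outputs (y-axis).
loads_n = [0.0, 250.0, 500.0, 750.0, 1000.0]       # applied force, N
outputs_mv_v = [0.000, 0.500, 1.000, 1.500, 2.000]  # load cell output, mV/V

slope, offset = fit_line(loads_n, outputs_mv_v)
print(f"slope = {slope:.6f} mV/V per N, offset = {offset:.6f} mV/V")
```

The slope of the fitted line is the load cell’s sensitivity over the calibrated range, and the offset is its output at zero load. Real calibration data will not fall exactly on a line, which is why the individual points are kept alongside the fit.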

Test and measurement professionals use the calibration curve to convert the load cell output to the true value of the force being measured. The curve establishes the relationship between the load and the output signal, and it provides a means to convert the load cell’s output into accurate force or weight measurements. For example, if the curve shows that an output of 100 units corresponds to a known applied force of 100 N, then a later reading of 100 units can be converted to 100 N, accurate to within the tolerance established during calibration.
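For a linear calibration curve, the conversion amounts to inverting the straight line. The following is a minimal sketch, assuming the curve has already been reduced to a slope and an offset; the coefficient values and the `output_to_force` helper are hypothetical.

```python
def output_to_force(output, slope, offset):
    """Invert output = slope * force + offset to recover the applied force."""
    if slope == 0:
        raise ValueError("slope must be non-zero to invert the curve")
    return (output - offset) / slope


# Hypothetical coefficients taken from a calibration curve.
SLOPE_MV_V_PER_N = 0.002  # sensitivity, mV/V per newton
OFFSET_MV_V = 0.010       # output at zero load, mV/V

reading_mv_v = 1.210      # a reading logged during a test
force_n = output_to_force(reading_mv_v, SLOPE_MV_V_PER_N, OFFSET_MV_V)
print(f"{reading_mv_v} mV/V corresponds to about {force_n:.1f} N")
```

A straight-line inversion is only appropriate when the curve is close to linear over the range of interest. Otherwise a higher-order fit or a lookup table between calibration points may be a better choice.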

Benefits of using a calibration curve in force measurement:

- It ensures that the force measuring instrument is accurate and dependable.

- It provides a way to convert the load cell output to the true value of the force being measured.

- It can be used to identify and correct errors caused by drift, sensitivity changes, overload, and hysteresis.

- It supports traceability and makes it possible to track the performance of the measurement device over time.

Why does a calibration curve matter when calibrating load cells?

Load cells can be affected by a range of factors, including temperature variations, drift, and environmental conditions. The calibration curve helps identify and compensate for these factors. By periodically calibrating the load cell, any deviations from the original calibration curve can be detected, and appropriate corrections can be made to ensure accurate and reliable measurements.
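One way to picture this periodic check is to compare a new calibration run against the line from the original calibration and flag any point that deviates by more than an allowed tolerance. The sketch below assumes a linear original curve; the coefficients, readings, tolerance, and helper names are all hypothetical.

```python
def deviations_from_curve(loads, outputs, slope, offset):
    """Difference between each new output and the original curve's prediction."""
    return [y - (slope * x + offset) for x, y in zip(loads, outputs)]


def out_of_tolerance(loads, outputs, slope, offset, tolerance):
    """Return (load, deviation) pairs whose deviation exceeds the tolerance."""
    devs = deviations_from_curve(loads, outputs, slope, offset)
    return [(x, d) for x, d in zip(loads, devs) if abs(d) > tolerance]


# Hypothetical original calibration: output = 0.002 * load + 0.0 (mV/V, N).
ORIGINAL_SLOPE = 0.002
ORIGINAL_OFFSET = 0.0

# Hypothetical recalibration run a year later.
loads_n = [0.0, 500.0, 1000.0]
new_outputs_mv_v = [0.001, 1.002, 2.006]

flagged = out_of_tolerance(
    loads_n, new_outputs_mv_v, ORIGINAL_SLOPE, ORIGINAL_OFFSET, tolerance=0.004
)
for load, dev in flagged:
    print(f"At {load:.0f} N the output deviates by {dev:+.4f} mV/V")
```

In this made-up run only the full-scale point falls outside the tolerance, which would suggest a change in sensitivity rather than a simple zero shift.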

The calibration curve for a load cell should ideally be linear, indicating a consistent and predictable relationship between the applied load and the output signal. In practice, load cells may deviate from a straight line, for example through sensitivity variations across the range or hysteresis between increasing and decreasing loads. Calibration quantifies these deviations so they can be accounted for and corrected.
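To make these terms concrete, the sketch below computes nonlinearity and hysteresis as a percentage of full-scale output from one ascending and one descending run. It assumes one common convention (deviation from the straight line through the zero and full-scale points, and the largest ascending/descending difference at the same load); other definitions exist, and the data and function names are hypothetical.

```python
def nonlinearity_pct_fs(loads, ascending):
    """Max deviation from the end-point line, as % of full-scale output span."""
    span = ascending[-1] - ascending[0]
    load_span = loads[-1] - loads[0]
    worst = 0.0
    for x, y in zip(loads, ascending):
        ideal = ascending[0] + span * (x - loads[0]) / load_span
        worst = max(worst, abs(y - ideal))
    return 100.0 * worst / span


def hysteresis_pct_fs(ascending, descending):
    """Max ascending/descending difference at equal loads, as % of span."""
    span = ascending[-1] - ascending[0]
    worst = max(abs(d - a) for a, d in zip(ascending, descending))
    return 100.0 * worst / span


# Hypothetical runs, both listed in order of increasing load.
loads_n = [0.0, 500.0, 1000.0]
ascending_mv_v = [0.000, 1.001, 2.000]   # outputs while loading
descending_mv_v = [0.000, 1.003, 2.000]  # outputs at the same loads while unloading

nonlin = nonlinearity_pct_fs(loads_n, ascending_mv_v)
hyst = hysteresis_pct_fs(ascending_mv_v, descending_mv_v)
print(f"nonlinearity = {nonlin:.3f} % FS, hysteresis = {hyst:.3f} % FS")
```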

The calibration curve allows for the determination of the load cell’s sensitivity, linearity, and any potential adjustments or corrections needed to improve its accuracy. It serves as a reference to convert the load cell’s output signal into meaningful and calibrated measurements when the load cell is used in practical applications for force or weight measurement.

Calibration curves are an essential tool for ensuring the accuracy of force measurements across a wide variety of applications. If you work with load cells, it is important to understand calibration curves and how they can help you ensure accurate measurements.

Interface recommends annual calibration on all measurement devices. If you need to request a service, please go to our Calibration and Repair Request Form.
